Beyond getting visitors to your site, you have the crucial task of converting them into engaged customers—whether they sign up for a newsletter, complete a contact form, or make a purchase. A/B testing, also called experimentation, is an important tool to maximize the value of your organic (and paid) traffic and grow your business. But do it wrong, and you’ll hurt your conversions and SEO. Let’s avoid that!

What is A/B testing?

In short, A/B testing helps you find out whether your decisions are the right ones. Instead of relying on guesses, you get evidence that can show you’re a smart person making smart decisions. A is your baseline: what your website looks like before you change anything. B is the variation of A, such as a changed button, a different text, or any other change. You then test which of the two performs best.

So it’s about the truth, and doing it wrong is dangerous. Oh, and I forgot to mention that it’s easy to make mistakes. This all still sounds a bit vague, so let’s start with an example of an experiment:

A/B test example

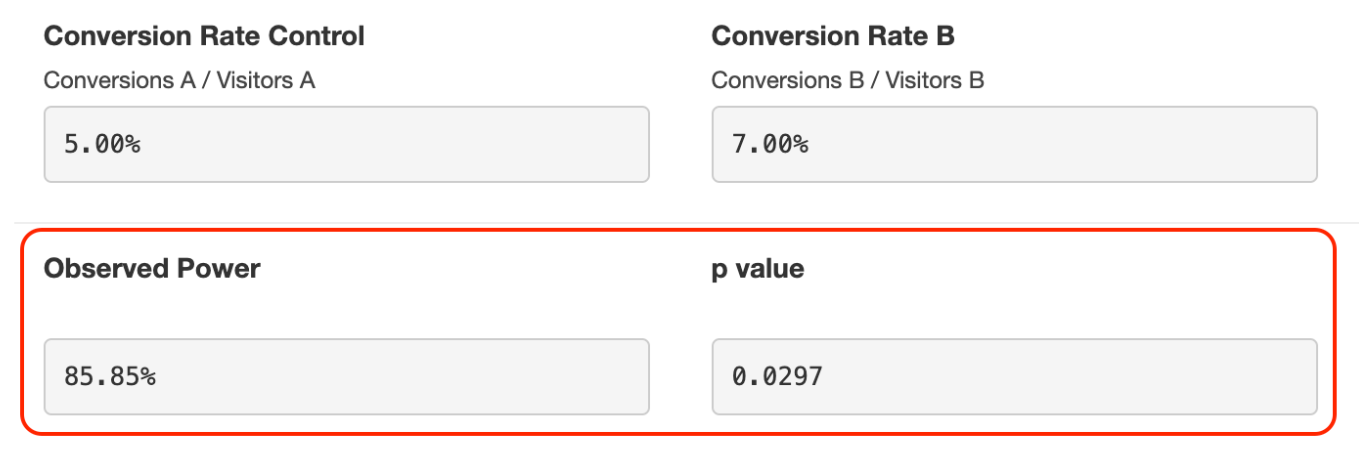

“Let’s say you have an online store selling flip-flops. Your product page gets 1000 monthly visits, but only 50 people buy a pair (a 5% conversion rate). That needs to improve! You read online that adding reviews is a best practice, so you decide to add one. A review can improve conversion, but it may not in this case. Do you take a chance or test? Test, of course! So what happens next?

There are two groups:

- Control group: the original product page without the review (5% conversion rate).

- Variant group: the modified product page with the review.

With an A/B testing tool, you randomly show half of your visitors the original product page and the other half the variant with the review. With the same tool, you track the number of visitors and the number of flip-flops sold for each group. Once each group has received 1000 visitors, you find the following:

- Control group: 50 purchases out of 1000 visitors (5% conversion rate)

- Variant: 70 purchases out of 1000 visitors (7% conversion rate).

Using an A/B test calculator (more about this later), you check whether the higher conversion rate is due to your change or to chance. Based on these results, you can declare your variant the winner. Hooray! You can safely add the review to improve the conversion rate on the product page.”
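To make the calculator step less of a black box, here is a minimal sketch of one common way such calculators check significance: a two-sided, two-proportion z-test. This is an assumption about the method; your tool may use a one-sided, Bayesian, or sequential approach instead, and its numbers can differ.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(visitors_a: int, conversions_a: int,
                          visitors_b: int, conversions_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    # Pooled rate: the best estimate of the conversion rate if A and B were truly equal.
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_error
    # Two-sided p-value: chance of a difference at least this large if the change did nothing.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# The flip-flop example: control 50/1000, variant 70/1000.
z, p = two_proportion_z_test(1000, 50, 1000, 70)
print(f"z = {z:.2f}, p-value = {p:.3f}")
```

For the flip-flop numbers, this sketch gives a p-value of roughly 0.06, which is under the 0.1 benchmark discussed in the statistics section.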

The benefits of A/B testing

Now that you know what A/B testing is, why would you jump through hoops and spend time and money on it? Isn’t making decisions based on expertise or a gut feeling easier?

Reducing risk

A/B testing is like a safety net for your business decisions. Instead of relying on gut feelings or assumptions, it gives evidence of what resonates with your audience, allowing you to move forward confidently and minimize the risk of negative outcomes.

Learning

Every experiment you run teaches you something about your audience’s preferences and behavior. In the example above (adding a review to the product page), you learned that this review increases conversion. But why does this review convince users? Can you use that message on other product pages? On your socials? Testing is a continuous learning process that helps you make better, more informed decisions. You learn what works best for your audience and business.

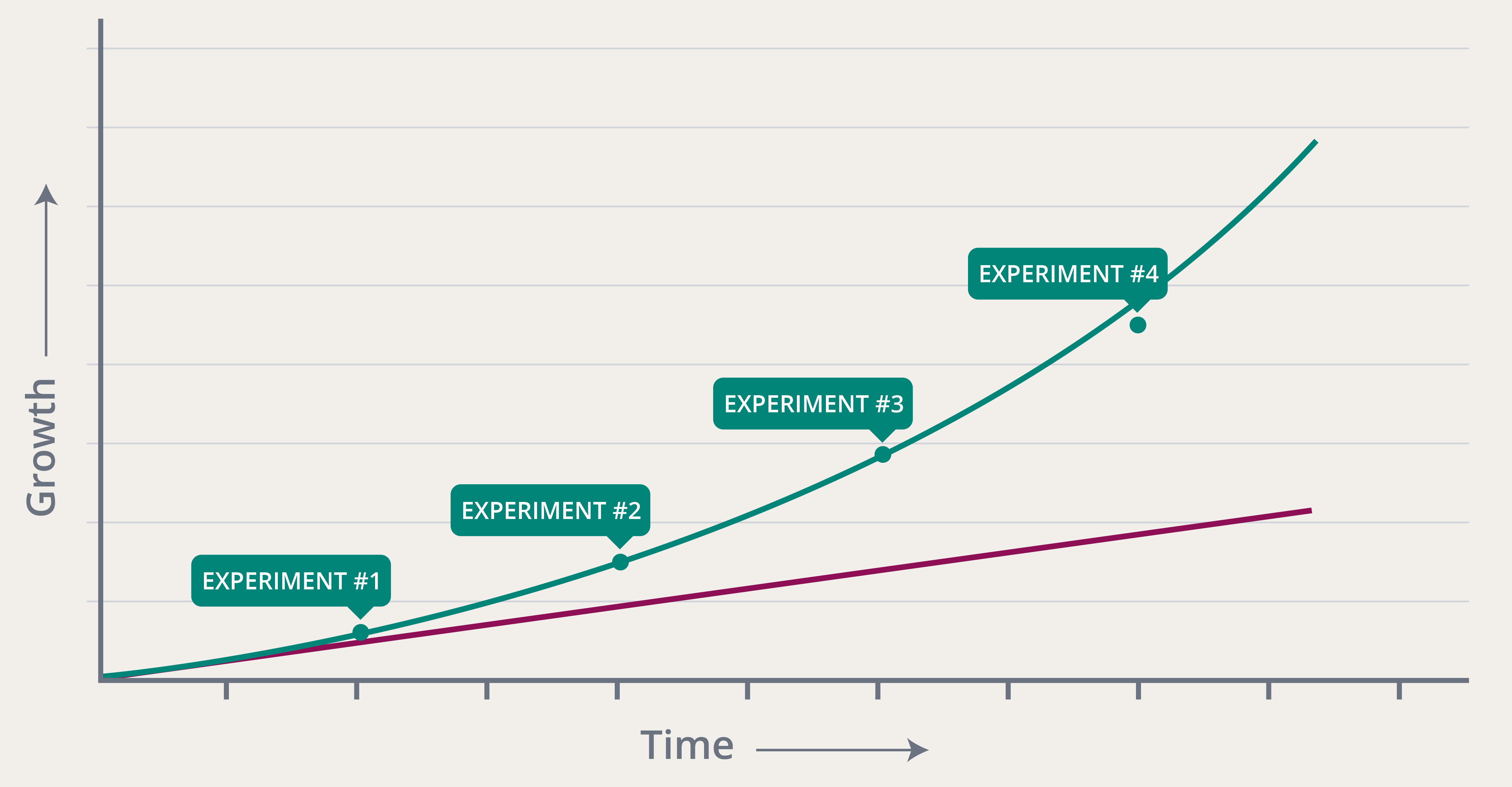

Money!

If you have stocks or a savings account, you might’ve heard of the term ‘compound interest.’ As Online Dialogue explains, compound interest is like a snowball rolling downhill. Every time an experiment increases your conversion rate, it adds extra revenue on top of the gains you already made. Just as importantly, testing keeps you from implementing changes that lower your conversion rate and slow down your business. By repeatedly making the right decisions, you get your business rolling like that snowball.

Here’s an example:

- Your current conversion rate is 5% (50 purchases from 1000 site visitors)

- With a 10% increase in conversion rate (from 5% to 5.5%), you add 5 purchases.

- With another 10% increase (from 5.5% to 6.05%), you add 5.5 purchases.

- With another 10% increase (from 6.05% to 6.655%), you add 6.05 purchases.

- And so on.

You’re benefiting more and more from the same conversion rate uplift.
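The compounding in this example can be reproduced with a short sketch. It assumes, as the example does, that traffic stays at 1000 visitors and that every winning experiment lifts the current conversion rate by the same relative 10%; the function name is just for illustration.

```python
def purchases_after_uplifts(visitors: int, base_rate: float,
                            uplift: float, experiments: int) -> list[float]:
    """Purchases before and after each successive winning experiment."""
    purchases = [visitors * base_rate]
    rate = base_rate
    for _ in range(experiments):
        # Each win multiplies the *current* rate, not the original one: that is the compounding.
        rate *= 1 + uplift
        purchases.append(visitors * rate)
    return purchases


history = purchases_after_uplifts(visitors=1000, base_rate=0.05, uplift=0.10, experiments=3)
for before, after in zip(history, history[1:]):
    print(f"{before:.2f} -> {after:.2f} purchases (+{after - before:.2f})")
```

The added purchases per step come out as 5, 5.5, and 6.05, matching the list above.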

Can I run A/B tests on my website?

Not everyone can run A/B tests on their websites, or at least not on all pages. There are three key elements you need for testing:

- Enough site visitors (organic, paid, or both).

- Enough conversions (in the broadest sense, i.e., purchases, signups, form completions, clicks).

- Clean data: you need to know that your tracking tool is accurate. If your tracking tool shows fifty purchases while, in reality, there were forty, you will draw the wrong conclusions.

So, what is ‘enough’ regarding site visitors or conversions? That depends. If you have relatively few visitors but a large share of them buy flip-flops, you might still be able to run tests, and vice versa. You can check this by calculating the ‘Minimum Detectable Effect’ (MDE).

The MDE is the smallest change in conversion rate that your test can reliably distinguish from chance, given your number of visitors and conversions.

Follow these steps:

- Look up your current page visitors and conversions and add these to the “Visitors A” and “Conversions A” fields in the A/B test calculator. (Example: 1000 visitors and 50 conversions).

- Add the same number of visitors in the “Visitors B” field and play around with the number of conversions. Is it significant for 60 conversions? 70? 65?

- Find the minimum conversion rate increase and assess whether it is realistic. As a benchmark, it should be 10% or lower: experience shows that increases of more than 10% are very difficult to achieve.
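The “play around with Conversions B” steps can be automated. This minimal sketch assumes the calculator uses a two-sided, pooled two-proportion z-test at the 0.1 significance level; that is an assumption, so compare the result against your own calculator. It finds the significance threshold at your current counts, which is a simpler shortcut than a formal MDE calculation that also targets a specific power.

```python
from math import sqrt
from statistics import NormalDist


def two_sided_p_value(visitors_a, conversions_a, visitors_b, conversions_b):
    """Pooled two-proportion z-test, as in a typical frequentist A/B calculator."""
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


def minimum_significant_conversions(visitors, conversions, alpha=0.10):
    """Smallest number of B conversions (same traffic as A) that reaches significance."""
    # Mirrors trying 60, 65, 70... in the calculator, but one conversion at a time.
    for conversions_b in range(conversions + 1, visitors + 1):
        if two_sided_p_value(visitors, conversions, visitors, conversions_b) <= alpha:
            return conversions_b
    return None  # no increase is detectable with this much traffic


baseline_conversions = 50
needed = minimum_significant_conversions(visitors=1000, conversions=baseline_conversions)
relative_increase = needed / baseline_conversions - 1
print(f"{needed} conversions needed, a {relative_increase:.0%} relative increase")
```

With 1000 visitors and 50 conversions, this sketch needs 68 conversions in B, a 36% relative increase. That is well above the 10% benchmark, so a page with this traffic would need more visitors or conversions before testing small changes is realistic.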

Which conversions should you use to measure the Minimum Detectable Effect (MDE)?

What should you use to determine the Minimum Detectable Effect (MDE)? Always use your primary business conversion first, like purchases, form completions, or meetings booked. If you don’t have enough of those, you can also test with secondary conversions, like clicks to checkout.

Important: Don’t use ‘hacks’ to push users from one page to another just to increase clicks in a test. Promising that your flip-flops let people fly might get more visitors to the checkout, but it leads to frustration and returned products, and it won’t grow your business in the long run.

What should you know about A/B testing before starting?

Your data is clean, and your flip-flop shop gets enough visitors and conversions to draw conclusions from. But hold on a second. Let’s go over a couple of basics you need to know before starting.

Always start your A/B tests with a hypothesis

You need to know what you’re testing. Your hypothesis is your idea. Having one is like having a plan before trying something new. Besides that, it helps you learn from the results.

A basic template for hypotheses is:

Because [the reason for the change, preferably based on research or data], we expect that [the change you make], will result in [change in behavior of site visitor]. We measure by [KPI you aim to influence].

So:

“Because adding reviews on the product page is a best practice in ecommerce, we expect that adding a review about the quality of the flip-flops above the fold on the product page will result in more trust in the product. We measure this by an increase in purchases.”

Basic understanding of statistics

There are tons of tools that evaluate the results of A/B tests, like the A/B Test Calculator. Even with these tools, it’s important to understand what you’re measuring to know when your data looks off. We’re not going to dive into statistics here, but it’s important to understand two main concepts:

- Statistical significance: This indicates whether the change in conversion rate is due to your change or to random chance. If an experiment is significant, you can assume your change affected the conversion rate. Significance is reflected by the ‘p-value.’ You don’t need to know how it’s calculated, but remember that the advised benchmark for this number is 0.1 or lower. Most A/B testing tools show this as a ‘confidence’ level (roughly 1 minus the p-value), which should be 90% or higher.

- Observed power: Power is the probability that a test detects a real change in conversion rate, giving you confidence in the results. It’s like a radar scanning the data landscape, ensuring that real effects are not missed due to small sample sizes or other limitations. The power should be at least 80%.

You can calculate the p-value and observed power with an A/B Test Calculator.
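As an illustration of what the calculator does for power, here is a minimal sketch of one common definition of observed (post-hoc) power for a two-sided two-proportion z-test at the 90% confidence benchmark. It treats the observed difference as if it were the true effect. Calculators compute observed power in different ways, so treat this as an approximation rather than your tool’s exact formula.

```python
from math import sqrt
from statistics import NormalDist


def observed_power(visitors_a, conversions_a, visitors_b, conversions_b, alpha=0.10):
    """Post-hoc power of a two-sided two-proportion z-test.

    Assumes the observed difference between A and B is the true effect.
    """
    normal = NormalDist()
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    # Unpooled standard error: here we assume the two rates really differ.
    std_error = sqrt(rate_a * (1 - rate_a) / visitors_a
                     + rate_b * (1 - rate_b) / visitors_b)
    effect = abs(rate_b - rate_a) / std_error
    z_critical = normal.inv_cdf(1 - alpha / 2)  # about 1.645 for 90% confidence
    # Probability that a test of this size comes out significant if the effect is real.
    return normal.cdf(effect - z_critical) + normal.cdf(-effect - z_critical)


power = observed_power(1000, 50, 1000, 70)
print(f"observed power: {power:.1%}")
```

For the flip-flop numbers, this sketch gives roughly 60%, below the 80% benchmark. A result can be significant and still underpowered, so it’s worth checking both numbers in your own calculator.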

As mentioned before, there are a lot of tools out there. At Yoast, we use Convert, which we’re quite happy with. But it starts at $350 per month, which might not fit your budget. I won’t recommend a specific tool, but keep these five points in mind when choosing one:

- Ease of use: If you don’t have (many) developers, choose a tool that is easy to implement and has a visual editor.

- Reliable data: Cheap tools are available, but make sure they track your data reliably. Reviews on Trustpilot, Capterra, or G2 can help you judge this.

- Support: It’s easy to break your website when you’re experimenting. Knowledgeable support has often saved my life, especially since I’m not a developer. Good support helps troubleshoot, pick the right testing method, and set up the experiment.

- Integrations: Good integrations make your life easier. For instance, Convert integrates with Hotjar and GA4, which makes it easy to segment data on those platforms and evaluate your experiments.

- Security: Verify that the tool adheres to industry data privacy and security standards, especially if you collect sensitive user information during experiments.

Ensure you have clean data

As mentioned, you need clean data to evaluate your experiments. If you’re collecting faulty data that reports more purchases than you actually get, you’ll make the wrong decisions. Here are a couple of tips:

- Cross-check the number of purchases from your data tools with your actual conversions from your CRM system.

- Don’t use GA4 data to calculate whether your experiment is a winner. You need exact numbers, and because GA4 can apply sampling, thresholding, or modeled data in some reports, it’s unreliable for this purpose.

- Check if you can set up your A/B testing tool to track all the main metrics, such as site visitors, signups, form completions, and purchases.
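The cross-check in the first tip boils down to comparing two counts for the same period. Here is a minimal sketch; the 5% tolerance is a hypothetical threshold, not an official standard, so pick one that fits your setup.

```python
def tracking_discrepancy(tool_count: int, crm_count: int) -> float:
    """Relative difference between what the testing tool and the CRM recorded."""
    if crm_count <= 0:
        raise ValueError("CRM count must be positive to compare against")
    return (tool_count - crm_count) / crm_count


def is_clean(tool_count: int, crm_count: int, tolerance: float = 0.05) -> bool:
    # tolerance is a hypothetical threshold (5% by default); adjust it to your own setup.
    return abs(tracking_discrepancy(tool_count, crm_count)) <= tolerance


# The "fifty tracked vs. forty real purchases" case from earlier: a 25% overcount.
print(tracking_discrepancy(50, 40), is_clean(50, 40))
```

Comparing over the same date range, and per test variant where your CRM allows it, makes it easier to spot where the counts start to drift apart.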

Don’t run tests longer than four weeks

Tests shouldn’t run longer than four weeks because cookies can expire. Browsers increasingly limit how long cookies persist, and when a returning visitor’s cookie is gone, they may be counted as a new visitor or shown a different variant. That leads to inconsistencies in data collection and can skew the results. Keeping tests within a shorter timeframe helps ensure the reliability and accuracy of the data you collect.
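Before launching, you can check whether your traffic can reach the required sample size within those four weeks. This minimal sketch uses the standard sample-size approximation for a two-sided two-proportion z-test at this article’s benchmarks (90% confidence, 80% power). The weekly_visitors value is a hypothetical input, and your testing tool’s calculator may use a different method.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_group(base_rate, relative_mde, alpha=0.10, power=0.80):
    """Approximate visitors needed per group to detect a relative uplift of relative_mde."""
    target_rate = base_rate * (1 + relative_mde)
    normal = NormalDist()
    z_alpha = normal.inv_cdf(1 - alpha / 2)  # 90% confidence, two-sided
    z_power = normal.inv_cdf(power)          # 80% power
    variance = base_rate * (1 - base_rate) + target_rate * (1 - target_rate)
    return ceil((z_alpha + z_power) ** 2 * variance / (target_rate - base_rate) ** 2)


def weeks_needed(base_rate, relative_mde, weekly_visitors):
    """Weeks until both groups (A and B) reach the required sample size."""
    return ceil(2 * visitors_per_group(base_rate, relative_mde) / weekly_visitors)


n = visitors_per_group(base_rate=0.05, relative_mde=0.10)
weeks = weeks_needed(0.05, 0.10, weekly_visitors=15_000)  # hypothetical traffic
print(n, weeks, "fits" if weeks <= 4 else "too long")
```

With a 5% baseline and a 10% relative MDE, this works out to roughly 24,600 visitors per group, so about 49,000 visitors in total within four weeks. If your page gets far less, test bigger changes or a page with more traffic.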

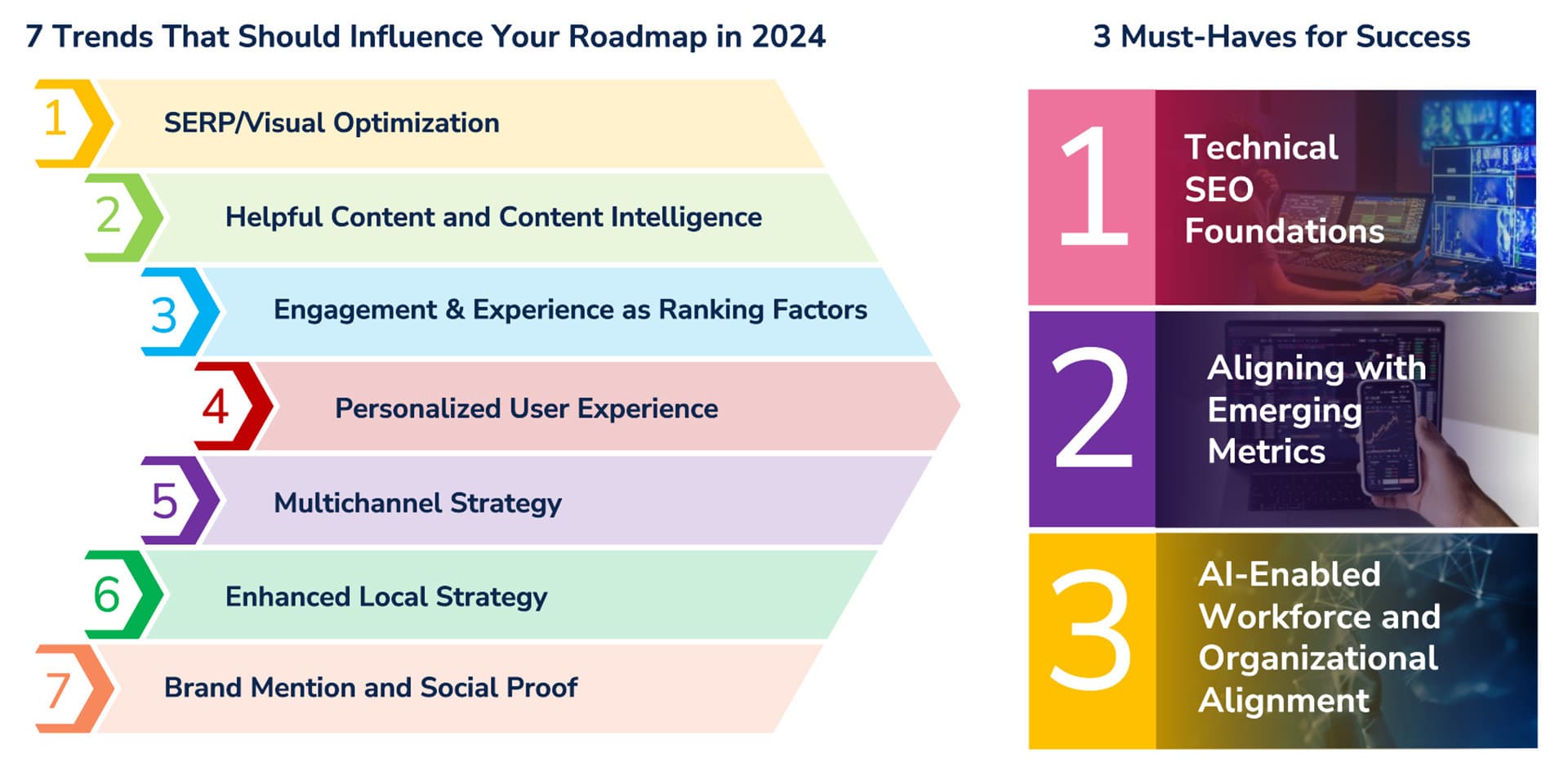

Pitfalls of A/B testing for SEO

At Yoast, we advise looking holistically at your website. When optimizing for conversion, you should also keep SEO in mind. Let’s go over a couple of pitfalls:

Hindering site performance

If your A/B testing tool is not optimized or tests are not properly configured, they can introduce latency and slow the overall site speed. Therefore, it’s crucial to carefully manage scripts, prioritize performance, and regularly monitor site speed metrics during A/B testing to mitigate these risks.

Forgetting to look at search intent

It’s essential to understand the search intent of your users before optimizing your website. Pushing users towards the wrong action, like purchasing, when they’re looking for information leads to a disjointed user experience. You can use Semrush to explore the search intent of keywords and ensure your content stays relevant to your audience’s needs. Aligning your optimization efforts with user intent ensures a smoother user journey.

Over-optimizing for conversion rate

One common pitfall is over-optimizing for conversion rate at the expense of user experience. Focusing solely on driving users towards conversions loses sight of the primary goal: offering high-quality content and helping users. Tunnel vision on conversion rate optimization can lead to a poor user experience, ultimately harming your website’s rankings and credibility.

Alternatives to A/B testing

So, what do you do if you don’t have enough traffic to experiment? Are you obligated to do everything by gut feeling? Luckily not. There are multiple things you can do.

Invest in SEO

The most sustainable approach is to invest in Search Engine Optimization (SEO). By optimizing your website for search engines, you can increase organic traffic over time. With more visitors to your site, you’ll have a larger pool of users to conduct A/B tests, allowing for more reliable results and informed decision-making. Prioritizing SEO enhances visibility and lays a solid foundation for future experimentation and growth. If you want to learn how to effectively increase your organic traffic, sign up and get our weekly SEO tips.

Use paid traffic

The quickest, but most expensive, way to start with A/B testing is to boost your traffic with advertising. I wouldn’t recommend this for a longer period, but it can help you validate a hypothesis more quickly. Keep in mind that it’s not the same audience: what works for paid traffic doesn’t necessarily work for organic traffic.

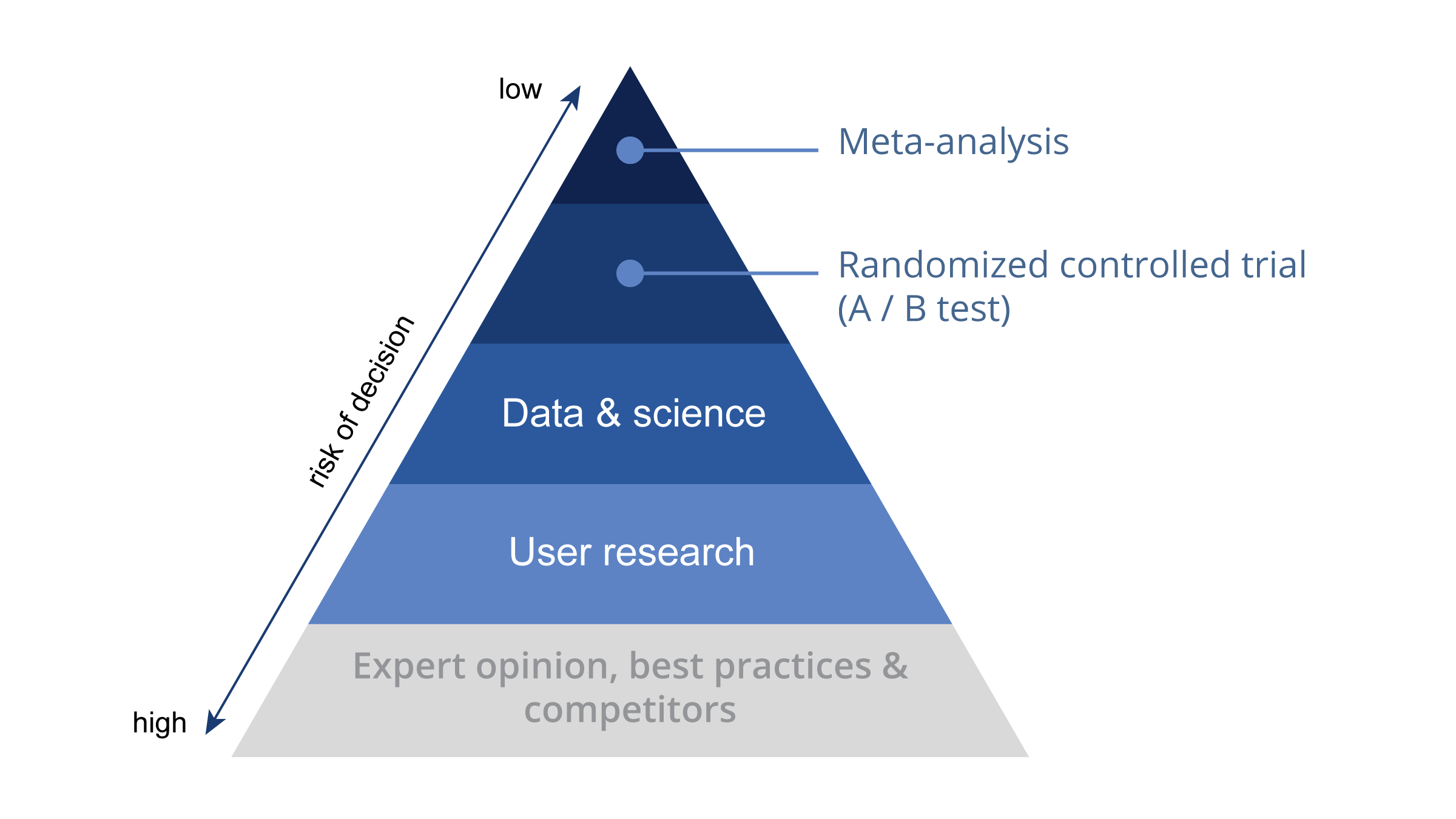

Use different validation methods

There are other ways to improve conversion when you can’t get enough traffic, but they carry a somewhat higher decision risk. This is best explained with the pyramid of evidence. This model comes from science and was introduced to conversion rate optimization by Ton Wesseling, founder of Online Dialogue.

The higher up the pyramid, the less bias and the lower the decision risk. A/B testing sits high up there, as making decisions based on A/B tests poses a low risk. Still, if you don’t have the data for an A/B test, it’s better to use data (like GA4) or user research (like surveys). It’s not as watertight, but it beats a gut feeling.

The important thing is to start with A/B testing

I’m going to quote Nike here: Just do it! Like with SEO, you need to start small. Yes, your data needs to be clean. Yes, you need enough conversions. But you also need to start and learn from your mistakes. Let Yoast SEO help you get enough organic site traffic to test and start A/B testing. You’ll grow your business and/or show your manager you’re as smart as you think.

Good luck!