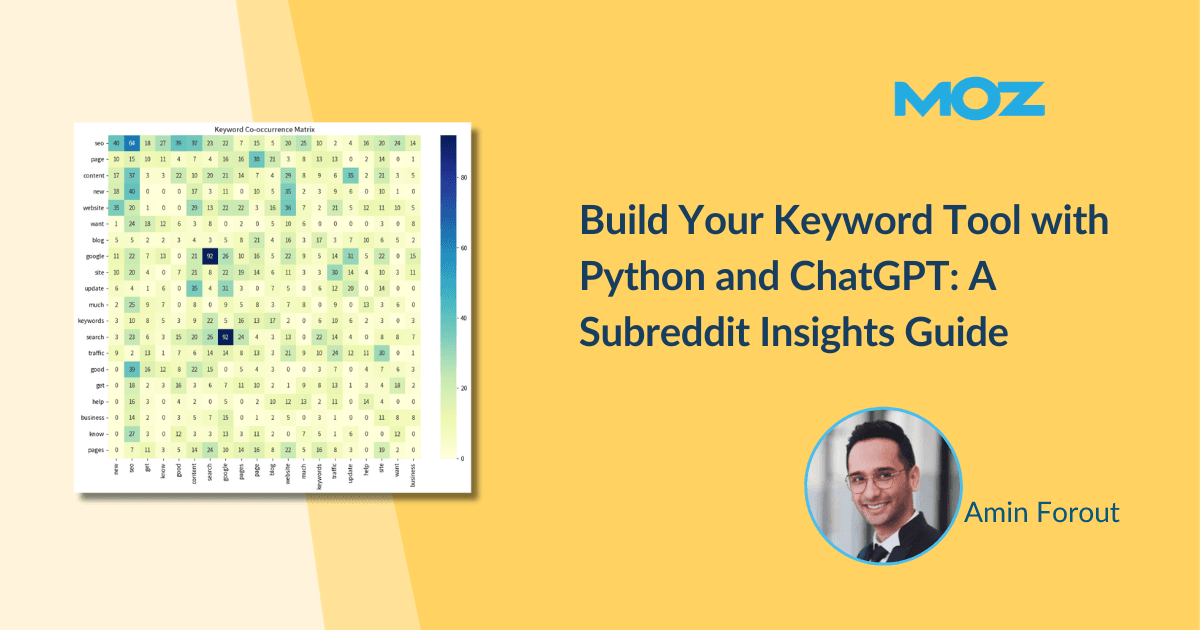

Here, you will learn how to direct ChatGPT to extract the most frequently repeated 1-word, 2-word, and 3-word queries from the Excel file. This analysis reveals the words and phrases used most often in the analyzed subreddit, which helps uncover prevalent topics. The result will be an Excel file with three sheets, one for each query length.

Structuring the prompt: Libraries and resources explained

In this prompt, we will instruct ChatGPT to read an Excel file, manipulate its data, and save the results to another Excel file using the Pandas library. For a more complete and accurate analysis, combine the “Question Titles” and “Question Text” columns; together they provide a richer dataset than either column alone.
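As a rough picture of the code this step usually produces, here is a minimal pandas sketch. It assumes the column names “Question Titles” and “Question Text” from the scraped file; the function name `combine_question_text` is hypothetical. Reading an `.xlsx` file with `pd.read_excel` also requires an Excel engine such as openpyxl.

```python
import pandas as pd


def combine_question_text(df: pd.DataFrame) -> pd.Series:
    """Join each row's title and body into one string for analysis."""
    # Missing titles or bodies become empty strings, so the concatenation
    # never produces NaN for a row that has only one of the two.
    titles = df["Question Titles"].fillna("").astype(str)
    bodies = df["Question Text"].fillna("").astype(str)
    # Strip the joining space left over when either part is empty.
    return (titles + " " + bodies).str.strip()


# Usage, assuming the scraped questions are stored in "{file-name}.xlsx":
# df = pd.read_excel("{file-name}.xlsx")
# combined = combine_question_text(df)
```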

The next step is to split the combined text into individual words (tokens), a process known as tokenization, and then group consecutive tokens into two-word and three-word sequences (n-grams). The NLTK library can handle both efficiently.
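The sketch below illustrates tokenization and n-gram generation with Python's built-in `re` module, so it runs without downloading any NLTK data. It is an approximation rather than NLTK's own code: the token pattern and the helper names `tokenize` and `ngrams` are assumptions. In NLTK, `RegexpTokenizer` (from `nltk.tokenize`) and `ngrams` (from `nltk.util`) fill the same roles.

```python
import re

# Assumed pattern: ASCII letters/digits, optionally joined by internal
# apostrophes so contractions such as "don't" stay a single token.
TOKEN_PATTERN = re.compile(r"[a-z0-9]+(?:'[a-z0-9]+)*")


def tokenize(text: str) -> list[str]:
    """Split text into lowercase word tokens, dropping punctuation."""
    # Normalize the curly apostrophe common in Reddit posts to a plain one.
    normalized = text.lower().replace("\u2019", "'")
    return TOKEN_PATTERN.findall(normalized)


def ngrams(tokens: list[str], n: int) -> list[str]:
    """Return every run of n consecutive tokens, joined by spaces."""
    # If there are fewer than n tokens, the range is empty and so is the result.
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
```

Calling `ngrams(tokens, 1)`, `ngrams(tokens, 2)`, and `ngrams(tokens, 3)` on the same token list yields the candidates for the three query groups.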

To ensure that tokenization keeps only meaningful words and excludes punctuation and very common words, the prompt will also include instructions to use NLTK tools such as RegexpTokenizer, which can discard punctuation, and the stopwords corpus, which lists common words like “the” and “is.”
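A minimal sketch of stopword filtering follows. The pattern `r"\w+"` keeps runs of letters, digits, and underscores, the same pattern commonly passed to NLTK's `RegexpTokenizer`, so punctuation never becomes a token. The stopword set below is a short hypothetical stand-in; the real NLTK list is much longer and has to be downloaded before use. The function name `meaningful_words` is also hypothetical.

```python
import re

# Hypothetical stand-in for NLTK's English stopword list. With NLTK installed,
# the real list can be loaded (after nltk.download("stopwords")) via:
#     from nltk.corpus import stopwords
#     STOP_WORDS = set(stopwords.words("english"))
# r"\w+" splits "don't" into "don" and "t", so the fragments are listed too.
STOP_WORDS = {"a", "the", "i", "do", "to", "how", "my", "is", "don", "t"}


def meaningful_words(text: str, stop_words: set[str]) -> list[str]:
    """Keep lowercase word tokens that are not stopwords."""
    # Equivalent in spirit to RegexpTokenizer(r"\w+").tokenize(text.lower()).
    tokens = re.findall(r"\w+", text.lower())
    return [t for t in tokens if t not in stop_words]
```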

To refine the filtering further, our prompt instructs ChatGPT to create a list of 50 supplementary stopwords covering colloquial phrases and common expressions that are frequent in subreddit discussions but missing from NLTK’s stopword list. If you also want to exclude specific words, create a list manually and include it in your prompt.
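Because some supplementary entries are multi-word phrases such as “I would,” a filter that only checks single words will not match them as phrases. The sketch below merges all lists into single words and phrases, then rejects any query that contains either as whole words. The three example lists and both function names are hypothetical; in practice the supplementary list would be the roughly 50 entries ChatGPT generates.

```python
# Hypothetical example lists; replace them with NLTK's list, ChatGPT's
# supplementary list, and your own manual exclusions.
BASE_STOPWORDS = {"i", "the", "a", "to", "my"}  # stand-in for NLTK's list
SUPPLEMENTARY = {"gonna", "lol", "i would", "thank you"}
MANUAL_EXCLUSIONS = {"Reddit"}


def build_exclusions(*word_lists):
    """Merge stopword lists into lowercase single words and multi-word phrases."""
    entries = {w.strip().lower() for lst in word_lists for w in lst}
    single = {e for e in entries if " " not in e}
    phrases = {e for e in entries if " " in e}
    return single, phrases


def is_excluded(query: str, single: set[str], phrases: set[str]) -> bool:
    """True if the query contains an excluded word or phrase as whole words."""
    if any(word in single for word in query.split()):
        return True
    # Pad with spaces so "i would" does not match inside "hi would".
    padded = f" {query} "
    return any(f" {p} " in padded for p in phrases)
```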

Once the data is cleaned, use the Counter class from the collections module to identify the most frequently occurring words and phrases. Save the findings in a new Excel file named “combined-queries.xlsx.” This file will contain three sheets, “One Word Queries,” “Two Word Queries,” and “Three Word Queries,” each listing the queries alongside the number of times they were mentioned.
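The counting and saving step might look like the sketch below. `Counter.most_common()` returns each query with its count, highest first, and `pd.ExcelWriter` writes one sheet per query length. The column headers “Query” and “Count” and the helper names are assumptions, and writing `.xlsx` files requires an engine such as openpyxl.

```python
from collections import Counter

import pandas as pd

SHEET_NAMES = {1: "One Word Queries", 2: "Two Word Queries", 3: "Three Word Queries"}


def count_queries(queries_by_size):
    """Turn {n: [query, ...]} into {sheet name: DataFrame sorted by count}."""
    frames = {}
    for n, queries in queries_by_size.items():
        # most_common() with no argument returns every query, highest count first.
        rows = Counter(queries).most_common()
        frames[SHEET_NAMES[n]] = pd.DataFrame(rows, columns=["Query", "Count"])
    return frames


def save_queries(frames, path="combined-queries.xlsx"):
    """Write each DataFrame to its own sheet of a single workbook."""
    with pd.ExcelWriter(path) as writer:
        for sheet_name, frame in frames.items():
            frame.to_excel(writer, sheet_name=sheet_name, index=False)
```

Calling `frames["One Word Queries"].head(5)` on the result gives a top-five table like the ones the example prompt asks ChatGPT to display.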

Structuring the prompt this way walks ChatGPT through data extraction, processing, and analysis in order, using the Python library best suited to each phase.

Tested example prompt for data extraction with suggestions for improvement

Below is an example prompt that captures the points above. To use it, copy and paste it into ChatGPT, replacing {file-name} with the name of your Excel file. You don’t need to follow this prompt strictly; feel free to modify it to suit your needs.

“Let’s extract the most repeated 1-word, 2-word, and 3-word queries from the Excel file named ‘{file-name}.xlsx.’ Use Python libraries like Pandas for data manipulation.

Start by reading the Excel file and combining the ‘Question Titles’ and ‘Question Text’ columns. Install and use the NLTK library and its necessary resources like Punkt for tokenization, ensuring that punctuation marks and other non-alphanumeric characters are filtered out during this process. Tokenize the combined text to generate one-word, two-word, and three-word queries.

Before we analyze the frequency, filter out common stop words using the NLTK library. In addition to the NLTK stopwords, incorporate an additional stopword list of 50 common auxiliary verbs, contractions, and colloquial phrases. This additional list should focus on phrases like ‘I would,’ ‘I should,’ ‘I don’t,’ etc., and be used with the NLTK stopwords.

Once the data is cleaned, use the Counter class from the collections module to determine the most frequent one-word, two-word, and three-word queries.

Save the results in three separate sheets in a new Excel file called ‘combined-queries.xlsx.’ The sheets should be named ‘One Word Queries,’ ‘Two Word Queries,’ and ‘Three Word Queries.’ Each sheet should list the queries alongside the number of times they were mentioned on Reddit.

Show me the list of the top 5 queries and their count for each group in 3 tables.”

Optimizing the number of keywords for faster output

When you have extracted data from many questions, consider requesting fewer keywords in the output to speed up the process. For instance, if you’ve pulled data from 400 questions, you might ask ChatGPT to show you only the top 3 keywords for each group. The full results are still saved in the Excel file, so you can download it to view more keywords. This approach can reduce ChatGPT’s processing time.

Streamlining the prompt for direct output

If you continue to experience interruptions and are not interested in understanding the workflow, consider adding the following line at the end of your prompt: “No need for any explanation; just provide the output.” This tells ChatGPT to skip the step-by-step commentary and deliver only the results.

Data-driven SEO insights with ChatGPT

You now have two datasets: the first lists the questions along with their URLs, number of comments, and upvotes; the second lists the one-word, two-word, and three-word queries.

To analyze or visualize this data with ChatGPT, either use the Noteable plugin or download the Excel files from the Noteable application and upload them to ChatGPT’s data analysis tool. This guide continues with the Noteable plugin so that all the work stays in the same chat.